Towards a Framework for the Certification of Reliable Autonomous Systems

Discussion Summary

Below is a summary of the video interview.

The paper you participated in proposes a framework for the certification of reliable autonomous systems. It involves scientists from many countries: the United Kingdom, Italy, the U.S., Germany, New Zealand, and Lebanon. How was this team assembled, and why is this international participation important?

Well, in Germany we have a wonderful institution called the Dagstuhl Castle, which organizes seminars that usually involve the international community. There was a seminar on autonomous systems, and within this seminar we formed working groups on specific topics. Within the working group I was involved with, we decided to compile the results of our discussions into one paper and to publish it at a conference.

What is a reliable autonomous system?

There are two notions that need to be defined here. One is, of course, the notion of reliability, and the other is that of autonomy. An autonomous system is one that works without human intervention. A system is reliable if it provides its functionality whenever it is needed.

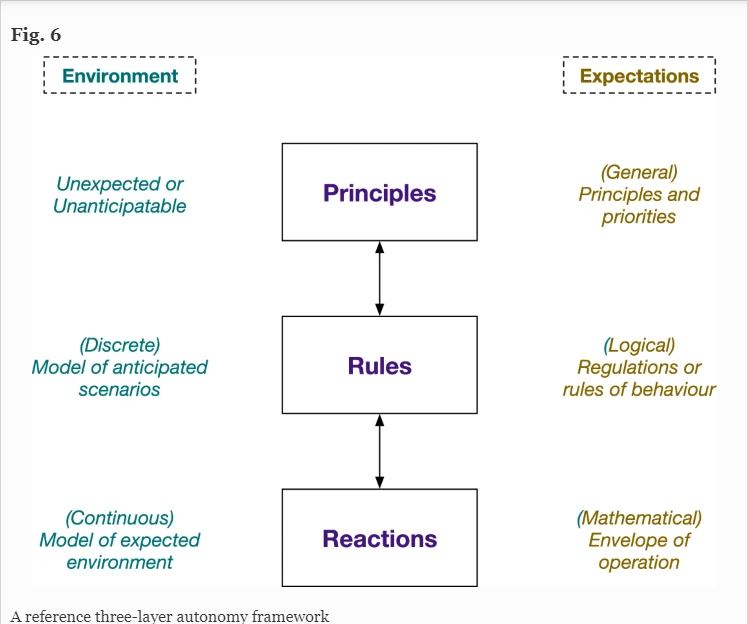

Your team proposes a three-layer autonomy framework of Reactions, Rules, and Principles to deal with these challenges. How did you come up with this model, and why do you think the model is robust?

The lowest layer is the Reactions layer, which covers direct reactions to sensory input. As an example, think of a vacuum cleaning robot that bumps into an obstacle, turns around, and moves away. Then there is the Rules layer, which is about learning how to follow rules. In the vacuum cleaning robot example, a rule would be to cover and vacuum the whole floor. When driving a car, there are also traffic rules. Above these two layers is another layer, Principles, which we as humans would call natural intelligence. Imagine a self-driving car coming to a roadblock where it needs to cross to the other side of the road to be able to advance at all.
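The layering described above can be illustrated with a minimal Python sketch. Everything in it is an assumption made for illustration: the `Situation` fields, the action names, and the order in which the layers are consulted are hypothetical and are not taken from the paper.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Situation:
    """Hypothetical snapshot of what the system currently perceives."""
    bumped: bool = False          # a contact sensor fired
    lane_blocked: bool = False    # the current lane cannot be used
    oncoming_clear: bool = False  # the opposite lane is free of traffic


def reactions_layer(s: Situation) -> Optional[str]:
    """Lowest layer: direct response to a sensory input."""
    return "turn_around" if s.bumped else None


def rules_layer(s: Situation) -> Optional[str]:
    """Middle layer: follow the applicable rule (here: stay in lane)."""
    return None if s.lane_blocked else "continue_in_lane"


def principles_layer(s: Situation) -> str:
    """Top layer: decide when no rule offers a way forward."""
    if s.lane_blocked and s.oncoming_clear:
        return "pass_on_other_side"
    return "stop_and_wait"


def decide(s: Situation) -> str:
    """Consult the layers from lowest to highest (an assumed ordering)."""
    for layer in (reactions_layer, rules_layer):
        action = layer(s)
        if action is not None:
            return action
    return principles_layer(s)
```

In this sketch, the roadblock case from the interview corresponds to `decide(Situation(lane_blocked=True, oncoming_clear=True))`, where the Rules layer has no answer and the decision falls through to the Principles layer.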

You also point out the many research, engineering, and regulatory challenges that need to be resolved. Can you elaborate on those challenges and how they might be overcome?

For me, the most important question is how we even specify what moral and responsible behaviour is. Normally, once we have specified things, we can reason about them and put them into programs, and the engineers need to make this transformation. But for autonomous systems, no such practices are established yet. There are no standards and procedures that regulators have to follow. So, this is a political discussion as well.

How would standards and regulations keep up with this market reality?

There is a real, concrete danger that so-called industry standards will emerge that push systems onto the market that society does not want. Regulators are called upon here to come up with standards and methods to validate that those systems serve human needs rather than industrial needs.

Different countries have different rules. Would certifying reliable autonomous systems therefore require some international harmonization of regulations?

Probably, there would need to be international regulations. But, especially for this ethical layer, I think many principles are the same in different countries.

The conclusion of your paper states that autonomous vehicles should have self-defense capabilities against humans?

Of course, this sounds a bit provocative. The machine is always a slave. However, humans have the ability to sabotage these systems, because they know that in the end the systems might take away their workplaces. The system, of course, should not have self-defense, but the machine should be able to record and collect whatever is happening in its environment.

What do you see at this point as the next step in the promotion and communication of this proposed framework for certification of reliable autonomous systems?

We need to have a discussion in society on what level of autonomy we would like to have. Self-driving cars are around the corner. What level of confidence do we need with these cars? What are the tasks we want to delegate to machines? And what is the role of humans, after all?

Holger Schlingloff is Chief Scientist of the System Quality Center (SQC) at the Fraunhofer Institute FOKUS and professor for software engineering at the Humboldt University of Berlin. His main interests are specification, verification and testing of embedded safety-critical software. He obtained his Ph.D. from the Technical University of Munich. He has more than 15 years of experience in testing of automotive systems, and has been managing industrial projects in the automotive, railway, and medical technology domains. He has also worked with tier-one suppliers such as Bosch, Siemens, IAV, Carmeq and Panasonic. His areas of expertise include quality assurance of embedded control software, model-based development and model checking, logical verification of requirements, static analysis, and automated software testing.

Peter Watkins is the Chief Operating Officer at QA Consultants and is the current President of the World Network of Productivity Organizations. Peter previously worked as Executive Vice President and Chief Technology Officer for the McGraw-Hill Companies, Executive Vice President and Chief Information Officer for the Canadian Imperial Bank of Commerce, Global Leader for Financial Services for EDS, and National Practice Director for Information Technology for E&Y.